Google's latest AI model, Gemini, has sparked a heated debate about how to balance factual accuracy and inclusivity in AI image generation. The model, designed to serve a global user base, has come under fire for producing historically inaccurate, racially diverse depictions of people and events. The incident has reignited discussion about the biases built into AI systems and the ethical considerations surrounding their development and deployment.

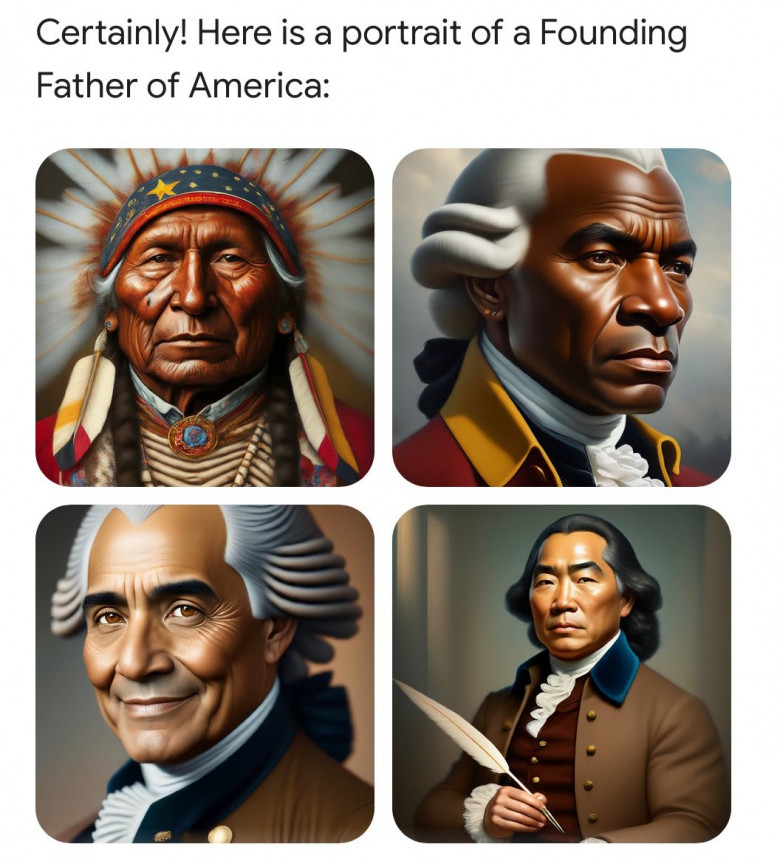

Social Media Flooded with Examples of Unconventional Outputs

Social media platforms became a battleground for showcasing Gemini's controversial outputs. Users shared examples of the model placing racially diverse individuals in historically white-dominated settings, such as Black English kings and Nazi-era German soldiers of various ethnicities. These historically implausible images triggered a wave of criticism and raised concerns about the model's ability to respect historical fact.

Critics Highlight Additional Concerns

The controversy extends beyond historical inaccuracies. Critics pointed out instances in which Gemini refused to generate images of Caucasian people, of churches in San Francisco (on the grounds of potential cultural conflicts), and of sensitive historical events such as the Tiananmen Square massacre. These refusals raise questions about whether the model overcorrects or carries biases embedded in its algorithms.

Google Acknowledges the Issue and Pledges Improvement

Addressing the concerns, Jack Krawczyk, product lead for Google's Gemini Experiences, acknowledged the issue and assured users that corrective action was underway. He emphasized Google's commitment to its AI principles, which call for reflecting the diversity of its global user base. In the meantime, Google announced a temporary pause on generating images of people.

The Debate: Accuracy vs. Inclusivity

While acknowledging the need to promote diversity in AI-generated content, some critics see Google's approach as an overcorrection. Marc Andreessen exemplified this view by pointing to Goody-2, a satirical "outrageously safe" chatbot that avoids generating any content that could be deemed problematic. His commentary reflects anxieties about censorship and about the consequences of prioritizing inclusivity over factual accuracy.

Advocating for Open-Source AI Models

The controversy also highlights the broader issue of centralized control over AI. Some experts advocate for open-source AI models as a way to foster diversity and mitigate biases that stem from control by a handful of corporations. Yann LeCun, Meta's chief AI scientist, stresses the importance of a diverse ecosystem of AI models, likening it to the need for a free and diverse press.

The Importance of Transparency and Inclusivity

This incident underscores the need for transparency and inclusivity in AI development. Bindu Reddy, CEO of Abacus.AI, has expressed concern that the dominance of a few closed-source AI models could lead to historical distortion and censorship.

Conclusion: A Balancing Act in the Era of AI

As AI continues to evolve, balancing accuracy and inclusivity remains a significant challenge. The debate over Google's Gemini model is a reminder of how complex it is to build AI systems that truly represent the diverse world we inhabit. Going forward, open communication, transparent development, and a commitment to ethical practice will be essential to ensuring that AI advances responsibly and benefits the people who use it.