OpenAI, a leading artificial intelligence research organization, has announced the general availability of the GPT-4 Turbo with Vision API, which combines advanced language and vision capabilities. Compared with the previous version, the new model offers a larger context window, multimodal capabilities, and a lower price.

GPT-4 Turbo is available for all paying developers to try by passing 'gpt-4-1106-preview' as the model name in the API. The stable production-ready model is expected to be released in the coming weeks. The new model is also available to paid ChatGPT users, with improved capabilities in writing, math, logical reasoning, and coding.

GPT-4 Turbo offers a 128K context window, 16 times larger than the previous version's 8K context window. This lets the model take in far more text in a single request, which helps with tasks such as writing longer documents, summarizing lengthy texts, and analyzing large datasets.

The release also supports multimodal capabilities, including vision, image creation, and text-to-speech. This means that developers can use the API to build applications that work with images and audio as well as text. This opens up a wide range of potential use cases, including image recognition, video analysis, and voice-enabled applications.

One of the most significant improvements in GPT-4 Turbo is its affordability. OpenAI has priced input tokens at one third of GPT-4's rate and output tokens at one half, making the model more accessible to developers and businesses of all sizes. This is part of OpenAI's commitment to democratizing access to advanced AI capabilities and making them available to a wider audience.

GPT-4 Turbo is built on the same foundation as the previous version, which was trained on a vast dataset of text and code.
The model has knowledge of world events up to April 2023 and can perform a wide range of language tasks, including text generation, summarization, translation, and analysis. However, some users have reported issues with the model's cutoff date: by default, its answers may not reflect the most current data. Because developers cannot update the model's training data themselves, they may need to supply up-to-date information in the prompt when an application depends on it.

Despite this issue, GPT-4 Turbo is a significant step forward in the field of artificial intelligence. It offers advanced language and vision capabilities that can be integrated into a wide range of applications, from chatbots and virtual assistants to content management systems and data analytics platforms.

GPT-4 Turbo is also a testament to OpenAI's commitment to innovation in the field of AI. The organization has been at the forefront of AI research and development for several years, and its products and services are used by millions of people around the world.

In conclusion, OpenAI's GPT-4 Turbo with Vision API is a game-changer for developers and businesses. Its larger context window, multimodal capabilities, and lower price make it a powerful tool for building applications, and despite some issues with the model's cutoff date, it is likely to have a major impact on the way applications are built and used in the future.
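For developers who want to see what passing the model name and an image to the API might look like, here is a minimal sketch in Python. It only builds and prints a request body in the Chat Completions message format and does not send anything. The helper name `build_chat_request`, the placeholder image URL, and the `max_tokens` default are assumptions made for illustration, and whether a given model identifier accepts image input should be checked against OpenAI's documentation.

```python
import json

# Model identifier quoted in the article; replace it with whichever
# model your account exposes.
MODEL = "gpt-4-1106-preview"


def build_chat_request(prompt, image_url=None, model=MODEL, max_tokens=300):
    """Build a Chat Completions request body.

    Hypothetical helper for illustration, not part of any OpenAI library.
    """
    # The user message is a list of content parts, starting with the text.
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        # An image is passed as an extra content part next to the text.
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
    }


body = build_chat_request(
    "Describe this picture in one sentence.",
    image_url="https://example.com/photo.jpg",  # placeholder URL
)
print(json.dumps(body, indent=2))
```

The resulting JSON would be sent in a POST request to the Chat Completions endpoint with an API key. Keeping the body construction separate from the network call makes it easy to inspect or log the request before spending any tokens.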

OpenAI Unveils GPT-4 Turbo Alongside Vision API for Public Use

The company aims to empower developers with advanced language and vision capabilities at an affordable price

By Admin on April 15, 2024

Latest

Admin

May 30, 2024

The Automated Vehicles Act sets new standards for safety and innovation in the world of self-driving cars

Admin

May 29, 2024

xAI's Flagship Chatbot Grok Sets Sights on Transforming Conversational AI

Admin

May 24, 2024

Exploring the Implications of Samsung's Multi-Million Dollar Investment in AI Software

Admin

May 23, 2024

Satya Nadella's keynote showcases the power of GPT-4 and Azure AI in revolutionizing industries and empowering people worldwide.

Admin

May 22, 2024

Exploring Dell's Latest AI-Driven Technologies and Their Transformative Impact on Business Operations Across Healthcare, Finance, Manufacturing, and Retail Sectors

Recommended For You

Meta Unveils Innovative AI-Powered Advertising Tools

AI-Powered Advertising Tools

October 2023

SnapCalorie: AI-Powered Food Tracker Uses Photos to Estimate Calories

New app uses computer vision to identify and measure food in images

June 2023

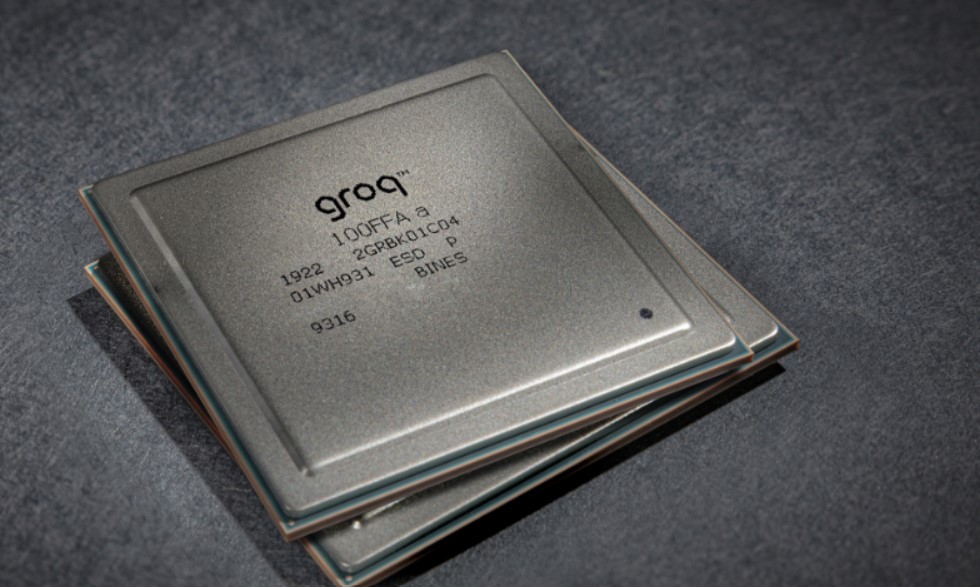

AI chip startup Groq forms new business unit, acquires Definitive Intelligence

March 2024

Stability AI's DreamStudio: An Open Source AI Design Studio for Everyone

The move aims to foster a community-driven development of generative AI tools

June 2023