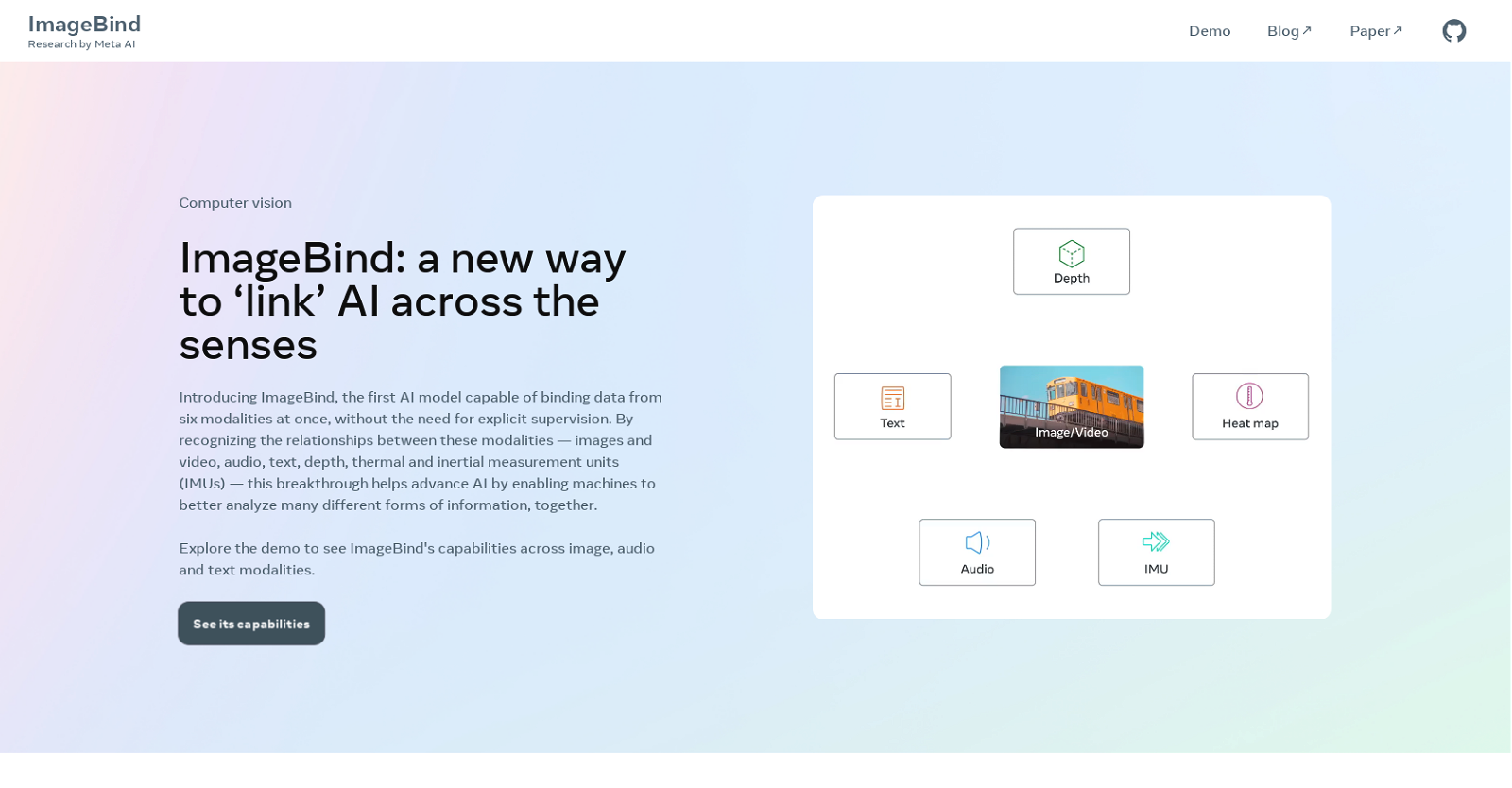

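ImageBind is an open-source AI model developed by Meta that learns from and links together six types of data: text, images and video, audio, depth (3D measurements), thermal (infrared temperature) imagery, and motion data from inertial measurement units (IMUs). It maps all of them into a single embedding space that binds these sensory inputs together, without needing training data in which every combination of modalities appears side by side; images paired with each of the other modalities act as the bridge.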

This makes ImageBind a powerful tool for a variety of applications, including:

Cross-modal search: Because every modality lands in the same embedding space, a query in one modality can retrieve items in another. ImageBind can be used to search for images, videos, or audio files based on a text description, for example to find images of a specific object, videos of a particular event, or audio recordings of a certain sound.

Multimodal arithmetic: Embeddings from different modalities can be added together, and the combined vector used as a query. For example, adding the embedding of a dog photo to the embedding of an audio clip of waves should land near images of dogs on a beach. The arithmetic operates on embedding vectors, not on the physical quantities in the data.

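Cross-modal generation: ImageBind's embeddings can be fed to a separate generative model to create new content from existing content. For example, an image generator conditioned on ImageBind embeddings could produce an image from an audio clip or from a text description. ImageBind supplies the shared representation; the generation itself is done by the downstream model.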
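Cross-modal search and embedding arithmetic both come down to comparing vectors in a shared space. The following is a minimal sketch in plain Python, assuming the embeddings have already been computed by some encoder. The three-dimensional vectors, the file names, and the helper functions `cosine`, `search`, and `combine` are all made up for illustration; none of them are part of ImageBind's API, and real embeddings have far more dimensions.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

def search(query, index):
    """Return item names ranked by similarity to the query, best first."""
    return sorted(index, key=lambda name: cosine(query, index[name]), reverse=True)

def combine(a, b):
    """Embedding arithmetic: add two unit vectors and renormalize."""
    return normalize([x + y for x, y in zip(normalize(a), normalize(b))])

# Toy stand-ins for image embeddings (hypothetical values).
index = {
    "dog_photo.jpg": [0.9, 0.1, 0.0],
    "beach_photo.jpg": [0.0, 0.1, 0.9],
    "dog_on_beach.jpg": [0.6, 0.1, 0.6],
}

# Toy stand-ins for a text query and an audio query (hypothetical values).
text_dog = [1.0, 0.0, 0.0]
audio_waves = [0.0, 0.0, 1.0]

# Cross-modal search: a text embedding retrieves the closest image.
print(search(text_dog, index)[0])

# Embedding arithmetic: text + audio retrieves an image matching both.
print(search(combine(text_dog, audio_waves), index)[0])
```

With these toy values, the text query alone ranks `dog_photo.jpg` first, while the combined text-plus-audio query ranks `dog_on_beach.jpg` first. Cosine similarity is used here as a common choice for comparing embeddings; a production system would typically replace the sorted scan with an approximate nearest-neighbor index.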

ImageBind is still a research project, but it has the potential to change the way we interact with computers. By allowing machines to relate multiple types of data to one another, ImageBind could make it easier for us to find, use, and create content.

Keywords: imagebind, ai, meta, cross-modal search, multimodal arithmetic, cross-modal generation, machine learning, artificial intelligence, multimodal learning, sensory data, embedding space